DATE: 2017-2018

PRODUCT: Wearable Audio AR device with computer vision for Meta's Building 8.

ROLE: Led the UX and design of Audio AR: exploring different form factors; defining use cases, usability requirements, and the companion app; and managing supporting agencies.

IMPACT: The prototypes and use cases from audio AR helped define the design of Ray-Ban Stories smart glasses a few years later.

Exploring smart audio devices that free you from your screen, so you can focus on the things that matter

One day we'll have full mixed reality, augmenting the entire world right in front of you. It will replace your phone, computer, and many physical devices. But that day is a few years away. This wearable audio AR product is about exploring what comes before that day.

Working at Building 8, Meta’s innovation center, we explored audio as an intermediate milestone for AR experiences, a way to gain real-world usage knowledge and build brand association.

Note: definitions of “Full AR” and “Full MR” changed over time and may also differ among companies. CV = Computer Vision.

Experience North Star:

"Like going on a walk with your friends, you experience the world together."

We knew from the start how complex this challenge was, not only technically but socially. We had all learned from the failure of Google Glass a few years earlier. The product had to be designed with aesthetics and etiquette in mind to gain social acceptance.

Be beautiful, be polite.

And, of course, be useful. In close partnership with the research team, I led an in-depth study of the use cases and of which ones would deliver a satisfactory experience with audio-only feedback.

Below is a summary table with the 46 scenarios we developed, how each phase of AR would fulfill the need, and how they map against user and market interest. This enabled the team to prioritize and define the minimal hardware and software feature set.

We developed 46 use case scenarios to understand the opportunity space.

Prototyping form factor and usability

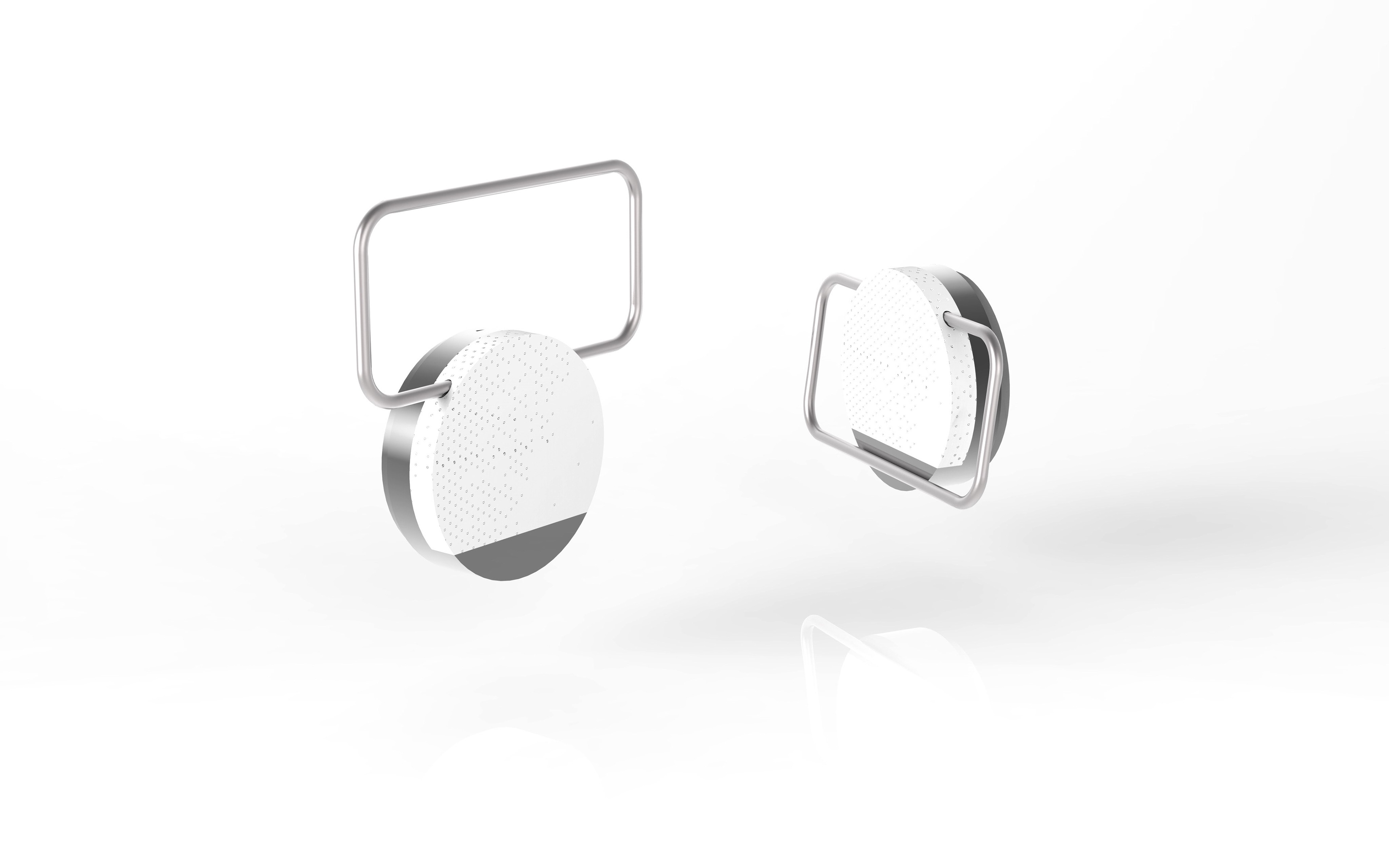

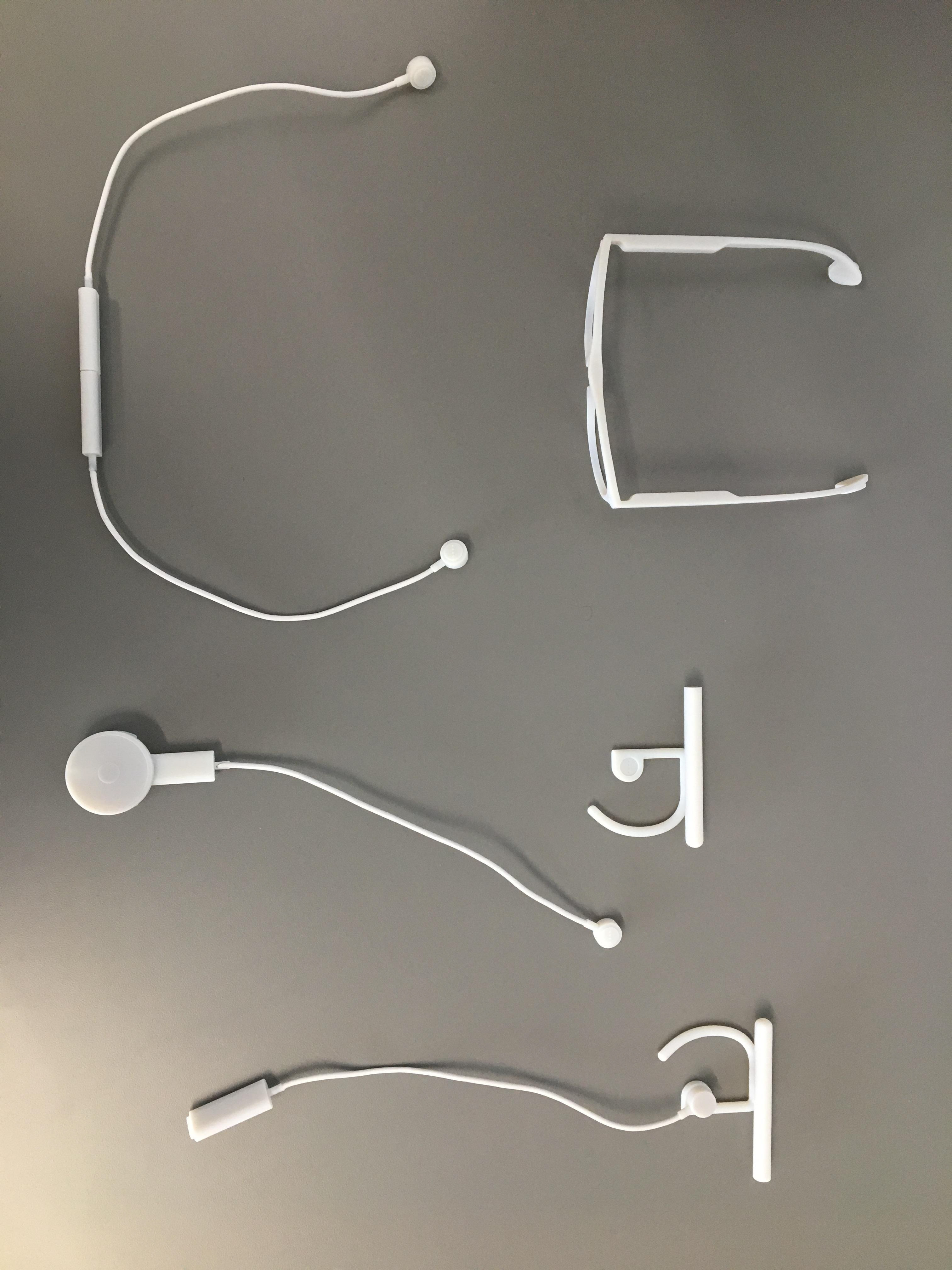

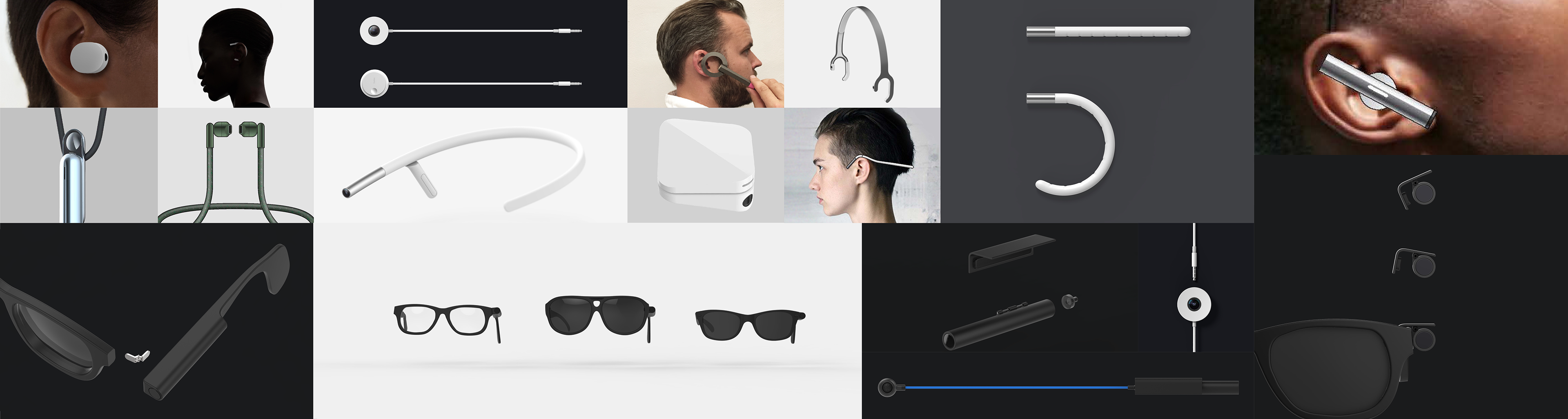

We created 67 industrial design mockups, exploring different form factors. We analyzed ergonomics, weight, and how each form constrains or enables experiences.

Audio-only AR with computer vision faced the challenge of requiring a screen for visual cues or menu selections. There was clear potential for hands-free interactions and image recognition for context understanding, improving Assistant and notifications. A quote from research: “It is intriguing that you could get that information while the phone is in the bag. But, I haven’t seen a hands-free that’s interesting.”

Visual AR without world-lock (SLAM) technology felt distracting, and it raised social and security concerns. The display felt like just another source of notifications, which was not transformative enough compared to smartphones. A quote from research: “Getting visuals could distract you from what you’re doing.”

brooch with CV

earbuds

over the ear

brooch with speaker

3D prints

material explorations

Prototypes with cameras

Renderings of wearables with cameras

Prototypes with displays, like spectacles or a loupe.

Functional prototypes

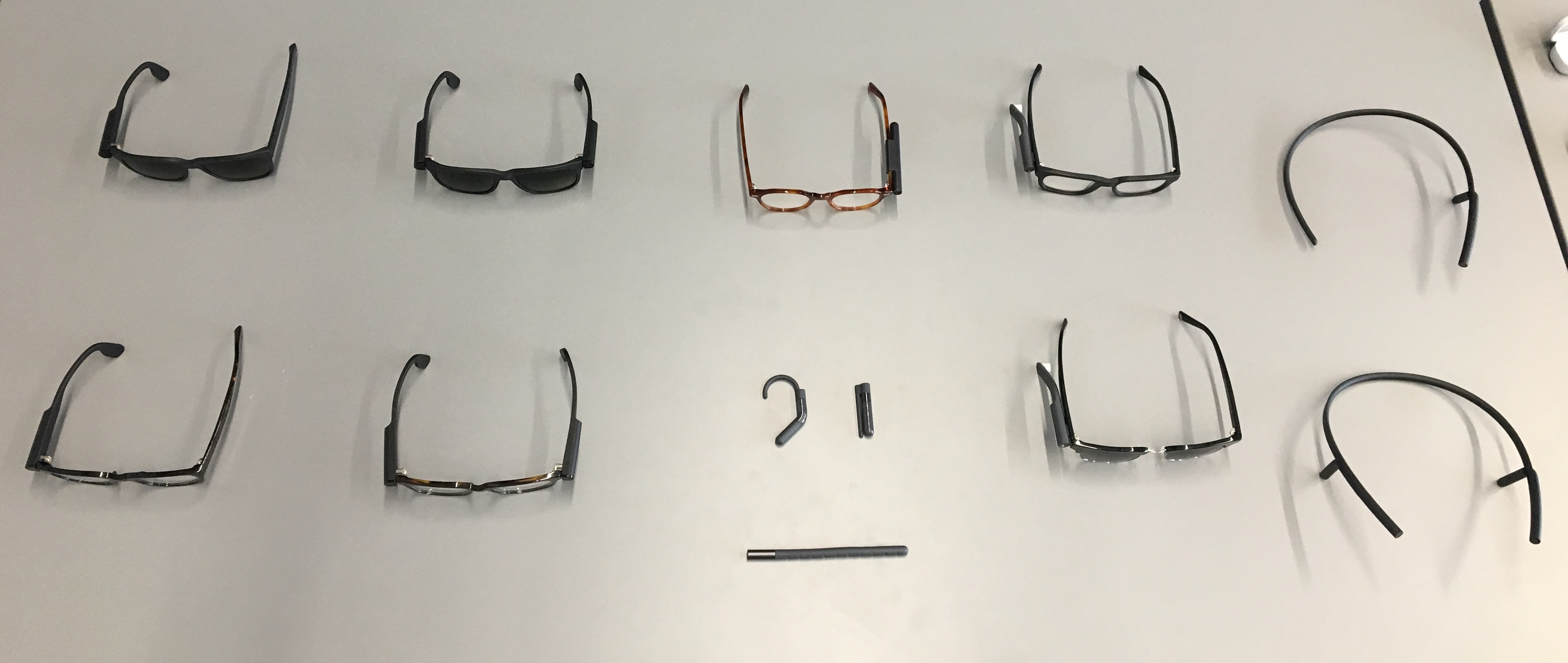

From the form factor and usability lessons, we narrowed the explorations to “Audio AR with computer vision” on glasses and brooch form factors.

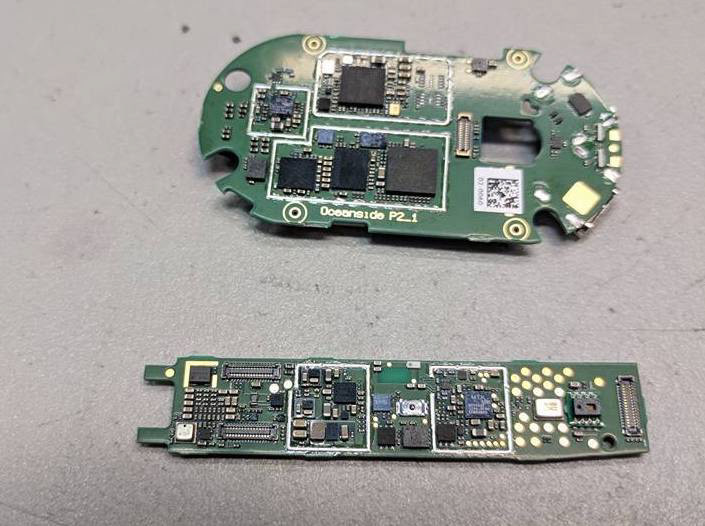

We created more than 20 fully functional prototypes for data gathering and usability testing.

I co-led the creation of a companion app for control of settings, debugging, and a feed for visualization of activity, captured images, and audio commands. We used the app internally for user research and refining the functional prototypes.

Two cameras and eight microphones placed on the glasses for contextual understanding.

compared to Google Clips

brooch circuit board

brooch prototype

More user research, and the companion app for visualization of activity, captured images, and audio commands.

Side by side with commercial Ray-Ban glasses.

Left: note the stereo bone-conduction audio rendering transducers.

Ray-Ban Stories 2021 launch

I was not part of this launch (due to a re-org, the project moved to a different group in 2018), but many of the features and use cases I worked on carried over to the final product.